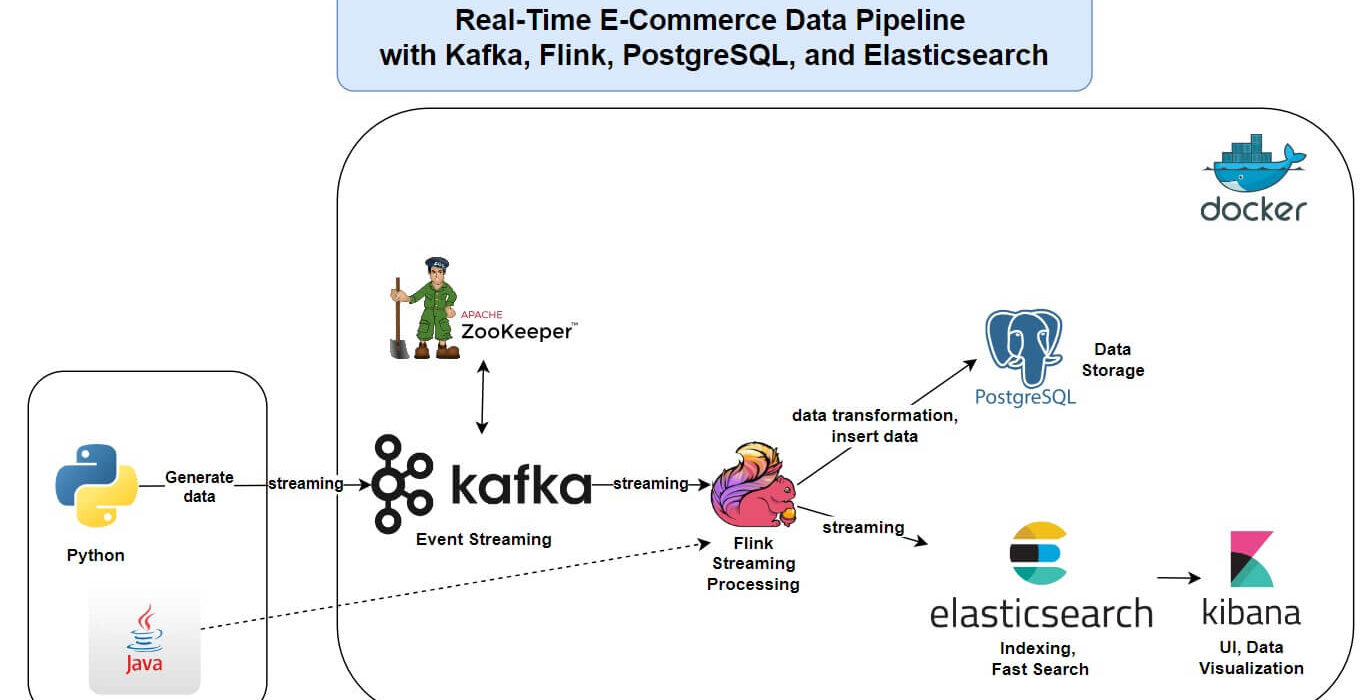

This project builds a real-time data pipeline for e-commerce sales analytics using Apache Kafka, Apache Flink, PostgreSQL, and Elasticsearch. The pipeline ingests high-throughput transaction data, processes it as it arrives, and stores the results for querying and visualization, supporting use cases such as inventory optimization and fraud detection.

- Technologies Used: Apache Kafka for event streaming, Apache Flink for stream processing, PostgreSQL for structured data storage, and Elasticsearch for fast querying and analytics.

- Key Features:

  - Data Ingestion: Kafka handles real-time transaction data from e-commerce platforms.

  - Stream Processing: Flink performs transformations, including parsing JSON, filtering invalid records, and aggregating data for actionable insights.

  - Data Storage: Processed data is stored in PostgreSQL for structured querying and indexed in Elasticsearch for efficient search and analytics.

  - Deployment: Docker and Docker Compose provide a containerized, reproducible deployment.

- Implementation: A Python script generates transaction data and sends it to Kafka topics. Flink consumes this data, applies transformations, and writes the results to PostgreSQL and Elasticsearch. The pipeline can be integrated with tools like Grafana for visualization and deployed on Kubernetes for scalability.
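The generate, parse, filter, and aggregate steps described above can be sketched in plain Python. This is a minimal illustration and not the repository's code: the field names (`transaction_id`, `product_category`, `quantity`, `unit_price`), the category list, and the validation rules are assumptions, and a list of JSON strings stands in for the Kafka topic, so no broker is needed to run it. In the actual pipeline, the transformation step runs in Flink.

```python
import json
import random
from collections import defaultdict
from datetime import datetime, timezone

# Hypothetical schema: these names are illustrative, not taken from the repository.
CATEGORIES = ["electronics", "books", "clothing"]
REQUIRED_FIELDS = ("transaction_id", "product_category", "quantity", "unit_price")


def generate_transaction(rng):
    """Build one synthetic transaction event as a dict."""
    return {
        "transaction_id": f"txn-{rng.randrange(10**9):09d}",
        "product_category": rng.choice(CATEGORIES),
        "quantity": rng.randint(1, 5),
        "unit_price": round(rng.uniform(5.0, 500.0), 2),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def parse_record(raw):
    """Parse a JSON string; return None if it is malformed or fails validation."""
    try:
        record = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(record, dict):
        return None
    if any(field not in record for field in REQUIRED_FIELDS):
        return None
    quantity, unit_price = record["quantity"], record["unit_price"]
    if not isinstance(quantity, int) or not isinstance(unit_price, (int, float)):
        return None
    # Assumed validity rule: positive quantity and non-negative price.
    if quantity <= 0 or unit_price < 0:
        return None
    return record


def sales_by_category(raw_messages):
    """Sum quantity * unit_price per category, skipping invalid records."""
    totals = defaultdict(float)
    for raw in raw_messages:
        record = parse_record(raw)
        if record is None:
            continue
        totals[record["product_category"]] += record["quantity"] * record["unit_price"]
    return dict(totals)


if __name__ == "__main__":
    rng = random.Random(42)
    # Stand-in for a Kafka topic: serialized events plus one malformed message.
    messages = [json.dumps(generate_transaction(rng)) for _ in range(10)]
    messages.append("not valid json")
    print(sales_by_category(messages))
```

Keeping validation in a single function that returns `None` for bad input mirrors a filter step in a stream job: malformed messages are dropped before aggregation instead of failing the whole run.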

For more details, refer to the full article: Building a Real-Time E-Commerce Data Pipeline.

Code: https://github.com/shj37/flink-kafka-elasticsearch-ecommerce-java