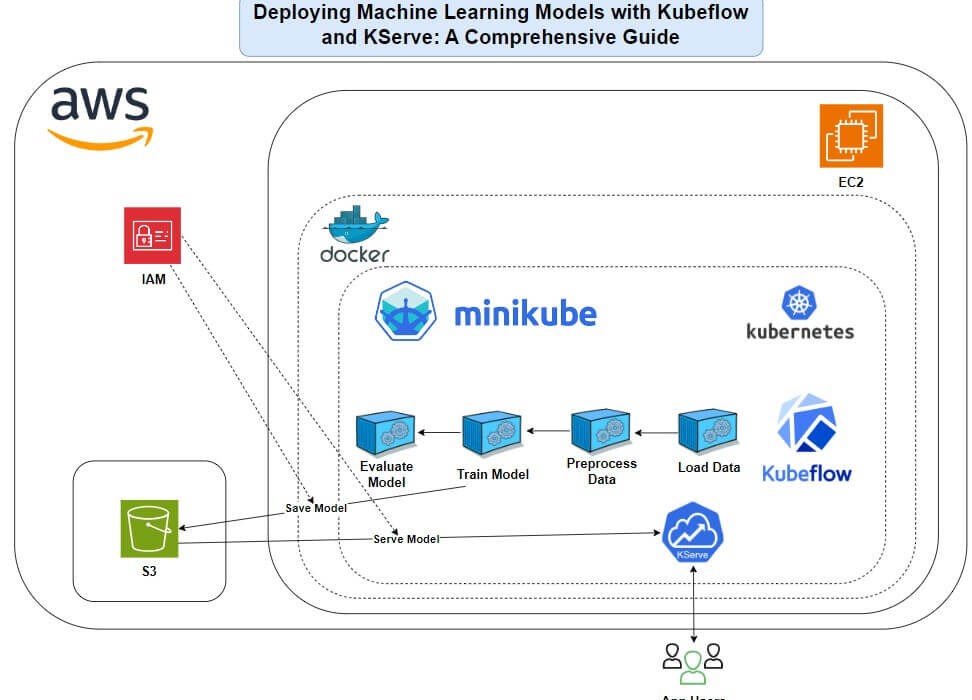

Purpose:

Build an automated, scalable ML workflow, from data preparation to model serving, on Kubernetes with Kubeflow Pipelines and KServe.

Technologies Used:

- Kubernetes (Minikube)

- Kubeflow Pipelines

- KServe

- AWS (EC2, S3)

- Python, Docker

Key Tasks:

- Built a Kubeflow Pipelines workflow covering data preparation, model training, and serving.

- Integrated AWS services, using S3 for model storage with secured access from the cluster.

- Deployed the model with KServe for scalable inference.
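The storage and serving tasks above can be sketched as Kubernetes manifests. The Python below is a minimal sketch, not the project's actual code. It builds three objects: a Secret holding AWS credentials (annotated the way KServe's storage initializer expects for S3), a ServiceAccount that attaches that Secret, and a KServe `v1beta1` InferenceService that loads a model from S3. It then prints all three as one JSON `List`, which `kubectl apply -f` accepts. The model name, bucket path, region, and resource names are hypothetical placeholders. The `sklearn` model format is also an assumption, because the project's model type isn't stated here.

```python
import json

# Hypothetical placeholders: substitute your own bucket, path, region, and names.
MODEL_NAME = "sklearn-model"                                  # hypothetical InferenceService name
STORAGE_URI = "s3://my-model-bucket/models/sklearn-model"     # hypothetical S3 model path
AWS_REGION = "us-east-1"                                      # assumed region
SERVICE_ACCOUNT = "s3-sa"                                     # hypothetical ServiceAccount name
SECRET_NAME = "s3-creds"                                      # hypothetical Secret name


def s3_secret(access_key: str, secret_key: str) -> dict:
    """Secret with AWS credentials; the annotations tell KServe how to reach S3."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": SECRET_NAME,
            "annotations": {
                "serving.kserve.io/s3-endpoint": "s3.amazonaws.com",
                "serving.kserve.io/s3-usehttps": "1",
                "serving.kserve.io/s3-region": AWS_REGION,
            },
        },
        "type": "Opaque",
        "stringData": {
            "AWS_ACCESS_KEY_ID": access_key,
            "AWS_SECRET_ACCESS_KEY": secret_key,
        },
    }


def service_account() -> dict:
    """ServiceAccount that links the S3 Secret to the predictor pod."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": SERVICE_ACCOUNT},
        "secrets": [{"name": SECRET_NAME}],
    }


def inference_service() -> dict:
    """KServe v1beta1 InferenceService that pulls the model from S3."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": MODEL_NAME},
        "spec": {
            "predictor": {
                "serviceAccountName": SERVICE_ACCOUNT,
                "model": {
                    "modelFormat": {"name": "sklearn"},  # assumed model format
                    "storageUri": STORAGE_URI,
                },
            }
        },
    }


def manifest_list(access_key: str, secret_key: str) -> dict:
    """Wrap all objects in a v1 List so a single `kubectl apply -f` creates them."""
    return {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            s3_secret(access_key, secret_key),
            service_account(),
            inference_service(),
        ],
    }


if __name__ == "__main__":
    # Placeholder credentials only; never commit real keys.
    print(json.dumps(manifest_list("<ACCESS_KEY_ID>", "<SECRET_ACCESS_KEY>"), indent=2))
```

For example, you could run `python kserve_manifests.py > kserve.json && kubectl apply -f kserve.json` (the file names are illustrative). Once the service reports ready, KServe's v1 inference protocol accepts a `POST` to `/v1/models/<name>:predict` with a JSON body of the form `{"instances": [...]}`. Generating the manifests in code keeps the bucket path and resource names in one place, which suits the goal of a repeatable deployment.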

Skills Applied:

- MLOps and pipeline automation.

- Cloud and container management.

- Secure, scalable ML deployment.

Result:

A working end-to-end ML deployment on Kubernetes and AWS, designed to be efficient and repeatable.

Link: *Deploying Machine Learning Models with Kubeflow and KServe: A Comprehensive Guide* by Shijun Ju (Medium, June 2025)

Code: shj37/Minikube-Kubeflow-ML-KServe-AWS-Project

All credit to iQuant for the original project design and code.