A detailed walkthrough is available on Medium: https://medium.com/@jushijun/building-an-incremental-data-pipeline-with-dbt-snowflake-and-amazon-s3-e8bee58e69d7

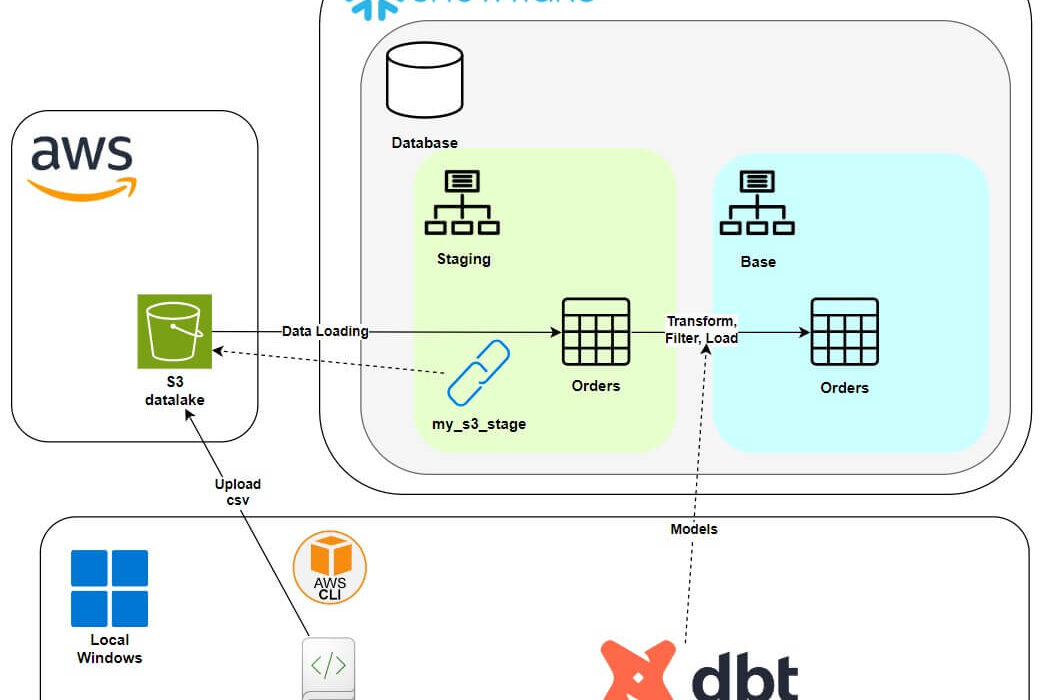

In this project, I developed a scalable data pipeline that integrates Amazon S3, Snowflake, and dbt to load and transform order data. The pipeline generates synthetic order data, stores it in S3, stages it in Snowflake, and uses dbt to perform incremental loads based on change data capture (CDC) timestamps. Each run therefore processes only new or updated records instead of reprocessing the full dataset.
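The incremental logic can be illustrated outside dbt with a small in-memory sketch. It assumes hypothetical columns `order_id` (the unique key) and `updated_at` (the CDC timestamp); the real models may use different names. It mirrors the common dbt incremental pattern: find the latest timestamp already loaded (the high-water mark), keep only source rows newer than it, and upsert them by key.

```python
from datetime import datetime
from typing import Any

# Hypothetical row shape: {"order_id": ..., "status": ..., "updated_at": datetime}
Order = dict[str, Any]


def incremental_merge(target: dict[str, Order], source: list[Order]) -> dict[str, Order]:
    """Merge only source rows newer than the target's high-water mark, keyed by order_id."""
    # High-water mark: latest CDC timestamp already loaded (None on the first run).
    watermark = max((row["updated_at"] for row in target.values()), default=None)
    # First run loads everything; later runs keep only rows past the watermark.
    new_rows = [r for r in source if watermark is None or r["updated_at"] > watermark]
    merged = dict(target)
    for row in sorted(new_rows, key=lambda r: r["updated_at"]):
        # Upsert: inserts new order_ids and overwrites updated ones.
        merged[row["order_id"]] = row
    return merged


first = incremental_merge({}, [
    {"order_id": "A", "status": "placed", "updated_at": datetime(2024, 1, 1)},
    {"order_id": "B", "status": "placed", "updated_at": datetime(2024, 1, 2)},
])
second = incremental_merge(first, [
    {"order_id": "A", "status": "shipped", "updated_at": datetime(2024, 1, 3)},  # update
    {"order_id": "B", "status": "placed", "updated_at": datetime(2024, 1, 2)},   # already loaded, skipped
    {"order_id": "C", "status": "placed", "updated_at": datetime(2024, 1, 4)},   # new
])
print(second["A"]["status"], len(second))  # shipped 3
```

One design caveat of a strict `>` filter: a late-arriving row whose timestamp equals or precedes the watermark is skipped, so some pipelines use `>=` with an upsert or a small lookback window instead.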

Key Achievements:

- Configured the AWS CLI and Snowflake so that files uploaded to S3 can be staged and loaded into Snowflake.

- Wrote Python scripts to generate and upload realistic order data to S3.

- Implemented dbt models for data transformation and incremental loading.

- Validated that the pipeline correctly handles both new and historical data.
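As a rough illustration of the data-generation step, the sketch below produces synthetic orders with monotonically increasing CDC timestamps and serializes them to CSV. The schema (`order_id`, `customer_id`, `amount`, `status`, `updated_at`) and the bucket and key names are hypothetical, not taken from the project. Uploading uses boto3's `put_object`, which requires boto3 and AWS credentials (for example from `aws configure`).

```python
import csv
import io
import random
import uuid
from datetime import datetime, timedelta, timezone

# Hypothetical schema; the project's actual columns may differ.
FIELDS = ["order_id", "customer_id", "amount", "status", "updated_at"]
STATUSES = ["placed", "shipped", "delivered", "cancelled"]


def generate_orders(n: int, start: datetime, seed: int = 42) -> list[dict]:
    """Create n synthetic orders whose updated_at values increase by one minute."""
    rng = random.Random(seed)  # seeded so the same inputs give the same rows
    rows = []
    for i in range(n):
        rows.append({
            "order_id": str(uuid.UUID(int=rng.getrandbits(128))),
            "customer_id": f"C{rng.randint(1, 500):04d}",
            "amount": f"{rng.uniform(5, 500):.2f}",
            "status": rng.choice(STATUSES),
            "updated_at": (start + timedelta(minutes=i)).isoformat(),
        })
    return rows


def to_csv(rows: list[dict]) -> str:
    """Serialize rows to CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def upload_to_s3(body: str, bucket: str, key: str) -> None:
    """Upload CSV text to S3 (needs boto3 and configured AWS credentials)."""
    import boto3  # imported lazily so generation works without boto3 installed

    boto3.client("s3").put_object(Bucket=bucket, Key=key, Body=body.encode("utf-8"))


if __name__ == "__main__":
    orders = generate_orders(3, datetime(2024, 1, 1, tzinfo=timezone.utc))
    print(to_csv(orders))
    # Hypothetical bucket/key; uncomment once AWS credentials are configured:
    # upload_to_s3(to_csv(orders), "my-orders-bucket", "orders/orders_2024-01-01.csv")
```

Writing one timestamped file per batch keeps earlier files intact in S3, so Snowflake can stage them and dbt's CDC filter decides which rows are new.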

This project highlights my skills in designing efficient data pipelines using modern data engineering tools and cloud services.