The project is described in detail on Medium.com:

- Part 1: Environment Setup and Ingestion https://medium.com/@jushijun/building-an-end-to-end-real-time-streaming-data-pipeline-with-azure-part-1-setup-and-ingestion-94f4fef4e05c

- Part 2: Data Ingestion with Azure Functions https://medium.com/@jushijun/building-an-end-to-end-real-time-streaming-data-pipeline-with-azure-part-2-data-ingestion-with-71ae343d1443

- Part 3: Data Loading, Reporting, and Real-Time Alerts https://medium.com/@jushijun/building-an-end-to-end-real-time-streaming-data-pipeline-with-azure-part-3-data-loading-75c084faa2c1

Description

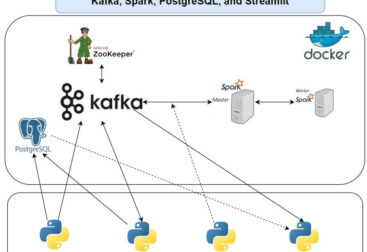

This project showcases the development of an end-to-end real-time streaming data pipeline using Microsoft Azure services. The objective was to ingest weather data from a public API, process it in real time, and deliver actionable insights through visualizations and alerts, while prioritizing cost efficiency and security. By combining several Azure services, I built a robust solution for streaming, transforming, and analyzing weather data, demonstrating my expertise in cloud-based data engineering.

Key Components

- Azure Databricks: Used for data ingestion and transformation of weather data.

- Azure Functions: Enabled serverless, event-driven ingestion of streaming data.

- Azure Event Hubs: Facilitated real-time event streaming with high throughput.

- Azure Key Vault: Ensured secure management of API keys and connection strings.

- Microsoft Fabric: Processed streaming data and loaded it into a KQL database for analysis.

- Power BI: Provided interactive data visualizations and real-time reporting.

- Fabric Activator: Monitored weather alerts in the eventstream and automatically sent email notifications to users.
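Fabric Activator rules are configured in the Fabric UI rather than written as code, so the following is only a Python sketch of the kind of rule logic described above, not the project's actual configuration. The event fields (`city`, `temp_c`, `wind_kph`, `alerts`) and every threshold are hypothetical. The sketch also assumes one email per city per episode: a city triggers an alert when it first breaches a threshold and is not alerted again until it returns to normal, so a stream that refreshes every few seconds does not flood inboxes.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class AlertRule:
    """Hypothetical thresholds for a 'significant weather event'."""
    max_wind_kph: float = 60.0
    min_temp_c: float = -10.0
    max_temp_c: float = 35.0


def alert_reason(event: dict, rule: AlertRule) -> Optional[str]:
    """Return why this event is alert-worthy, or None if it is not."""
    if event.get("alerts"):
        # Hypothetical field: a list of official alert headlines carried in the event
        return f"Weather alert in {event['city']}: {event['alerts'][0]}"
    if event["wind_kph"] > rule.max_wind_kph:
        return f"High wind in {event['city']}: {event['wind_kph']} km/h"
    if not rule.min_temp_c <= event["temp_c"] <= rule.max_temp_c:
        return f"Extreme temperature in {event['city']}: {event['temp_c']} °C"
    return None


def alerts_to_send(events: Iterable[dict], rule: AlertRule) -> List[Tuple[str, str]]:
    """Emit (city, reason) only when a city enters the alert state, not on every event."""
    in_alert = set()  # cities currently inside an alert episode
    to_send = []
    for event in events:
        city = event["city"]
        reason = alert_reason(event, rule)
        if reason is None:
            in_alert.discard(city)  # back to normal: the next breach alerts again
        elif city not in in_alert:
            in_alert.add(city)
            to_send.append((city, reason))
    return to_send
```

Edge-triggering like this is a design choice for the sketch; how Activator itself deduplicates depends on how the rule's condition is configured.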

Project Phases

- Environment Setup

- Configured a resource group and provisioned Azure resources: a Databricks workspace, a Function App, an Event Hubs namespace, a Key Vault, and a Fabric capacity.

- Obtained a weather API key from WeatherAPI.com for data ingestion.

- Data Ingestion

- Implemented dual ingestion methods using Azure Databricks and Azure Functions to fetch weather data and send it to Event Hubs.

- Integrated Azure Key Vault to store and retrieve secrets securely.

- Cost Analysis and Architectural Decisions

- Analyzed service costs with the Azure pricing calculator and chose cost-effective configurations (e.g., the Consumption plan for Functions and the Basic tier for Event Hubs) to balance performance and expense.

- Event Processing and Loading

- Used a Microsoft Fabric eventstream to read streaming data from Event Hubs, transform it, and load it into a KQL database for efficient querying.

- Data Reporting

- Developed a Power BI report to visualize weather trends, with automatic page refresh set to every 30 seconds for near-real-time updates.

- Configured real-time alerts with Fabric Activator to email users about significant weather events.

- End-to-End Testing

- Tested the pipeline end to end, from data ingestion through reporting, to validate its functionality, reliability, and accuracy.
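In the Data Ingestion phase above, both Databricks and Azure Functions fetch weather data and send it to Event Hubs. A minimal, dependency-free sketch of the step the two paths share (building the request and reshaping the response into a compact event body) might look like this. It assumes WeatherAPI.com's `current.json` endpoint and its `location`/`current` response fields; the source does not say which fields the pipeline kept, so the selection below is illustrative and the function names are hypothetical.

```python
import json
from urllib.parse import urlencode

# WeatherAPI.com current-conditions endpoint (assumed; check the provider's docs)
CURRENT_URL = "https://api.weatherapi.com/v1/current.json"


def build_request_url(api_key: str, location: str) -> str:
    """Build the GET URL for current conditions at `location` (e.g. a city name)."""
    return f"{CURRENT_URL}?{urlencode({'key': api_key, 'q': location})}"


def to_event_body(response: dict) -> str:
    """Flatten a current.json response into one JSON string to send as an Event Hubs event."""
    loc = response["location"]
    cur = response["current"]
    event = {
        "city": loc["name"],
        "country": loc["country"],
        "localtime": loc["localtime"],
        "temp_c": cur["temp_c"],
        "humidity": cur["humidity"],
        "wind_kph": cur["wind_kph"],
        "condition": cur["condition"]["text"],
    }
    return json.dumps(event)
```

The resulting string can be wrapped in `EventData` and sent with `EventHubProducerClient` from the `azure-eventhub` package (for example via `create_batch()`, `add()`, and `send_batch()`); that call is omitted so the sketch runs without Azure credentials. Keeping the reshaping in a plain function is one way to make the Databricks and Functions paths emit identical events.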

Challenges and Solutions

- Challenge: Securely managing API keys and connection strings.

Solution: Implemented Azure Key Vault to store and access secrets securely.

- Challenge: Maintaining cost efficiency in a cloud environment.

Solution: Evaluated pricing tiers and selected cost-effective options without compromising performance.

- Challenge: Processing real-time streaming data efficiently.

Solution: Leveraged Azure Event Hubs and Fabric for seamless streaming and transformation.
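For the secrets challenge, one common way for an Azure Function App to consume Key Vault secrets is a Key Vault reference in an app setting, such as `@Microsoft.KeyVault(SecretUri=...)`, which the platform resolves and exposes to code as an ordinary environment variable. The source does not say which mechanism was used, so the sketch below assumes that one; the setting names are hypothetical. It fails loudly both when the setting is missing and when the platform could not resolve the reference and passed the raw reference string through.

```python
import os

# Prefix of an App Service / Azure Functions Key Vault reference in an app setting
KEY_VAULT_REFERENCE_PREFIX = "@Microsoft.KeyVault("


def get_secret(setting_name: str) -> str:
    """Return a secret that the platform injected as an app setting (environment variable)."""
    value = os.environ.get(setting_name)
    if value is None:
        raise KeyError(f"App setting {setting_name!r} is not defined")
    if value.startswith(KEY_VAULT_REFERENCE_PREFIX):
        # An unresolved reference arrives as the literal reference string
        raise RuntimeError(
            f"Key Vault reference in {setting_name!r} was not resolved; "
            "check that the app's managed identity can read the secret"
        )
    return value
```

A call such as `get_secret("WEATHER_API_KEY")` (hypothetical name) keeps the key out of source code. On the Databricks side, a Key Vault-backed secret scope read with `dbutils.secrets.get(scope=..., key=...)` plays the same role.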

This project highlights my ability to design and implement scalable, secure, and cost-effective data engineering solutions in Azure, with a focus on real-time data processing and actionable insights.